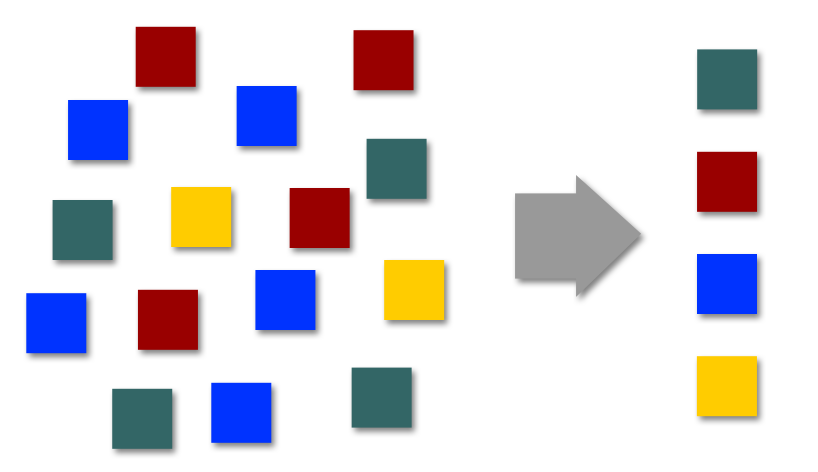

Last week we worked with the City of New Haven, Connecticut, to prepare for and respond to Superstorm Sandy. This blog post is part of a series in which we discuss our experiences and what we learned as we helped New Haven through this crisis.

Single events create lots of unfiltered, unsorted data

During a fast-paced emergency response operation, where the speed of incoming information often exceeds validation capabilities, data duplication is inevitable.

Generally speaking, in any emergency operations center (EOC) in the midst of a crisis, there are multiple reports on a particular event or action item, such as a downed utility pole or the removal of branches from a road. Each of those reports includes overlapping and unique information based on the callers’ perspectives; some of that information is vital to an effective response, some is unnecessary noise, and some may be incorrect. Within all of this information is a core of essential data that is required to trigger the right response and assign the right tasks. At the same time, it’s important to know when incoming reports refer to the same event or action, so that you launch the appropriate response without duplicating responses to the same issue.

Validate data afterward, quickly

But simply trying to eliminate the duplication of data from the get-go isn’t realistic – how can you prevent multiple people from calling in the same problem? In fact, trying to validate or filter out information while you’re collecting it could have negative effects. In particular, during rapid response situations, the initial responders – the operators, dispatchers, and other people taking calls and receiving the first-hand reports – may simply not have enough information to quickly determine if they are fielding a duplicate or inaccurate request or report. And would you want them to have to spend time trying to manually reduce noise and avoid data duplication when their time is meant to be spent taking in as much information from as many people as possible?

Negative space defines focus

In fact, if you’ve got a system that processes, handles, and displays all that data in a smart and efficient way, you’d actually want the noise, anomalies, and inaccuracies. Just as negative space on a canvas can define where the viewer should be looking, the total picture of an incident helps you understand where in that picture to focus and deploy resources. More specifically, what can be improved is the validation of incoming data: automate it once the data has been entered into the system (rather than preemptively, which as noted carries unacceptable risks), and make sure the data can be sorted and filtered immediately.

By providing robust sorting and filtering capabilities coupled with data visualization and task/event mapping, potential duplicates can be quickly and easily identified, in turn making the task closure and verification process much more efficient.
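
To make the idea concrete, here is a minimal Python sketch of how potential duplicates might be flagged after reports have already been entered. Everything in it is an illustrative assumption rather than a description of any particular system: the `Report` fields, the `group_potential_duplicates` helper, and the 75-meter and two-hour thresholds are all hypothetical. Note that nothing is thrown away; every report is kept and simply grouped with others that look like the same event.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from math import asin, cos, radians, sin, sqrt
from typing import List


@dataclass
class Report:
    """One incoming report, stored exactly as the call taker entered it (hypothetical fields)."""
    report_id: int
    category: str        # e.g. "downed_pole", "road_debris"
    lat: float
    lon: float
    received: datetime
    note: str = ""


def distance_m(a: Report, b: Report) -> float:
    """Great-circle (haversine) distance between two reports, in meters."""
    earth_radius_m = 6_371_000.0
    dlat = radians(b.lat - a.lat)
    dlon = radians(b.lon - a.lon)
    h = sin(dlat / 2) ** 2 + cos(radians(a.lat)) * cos(radians(b.lat)) * sin(dlon / 2) ** 2
    return 2 * earth_radius_m * asin(sqrt(h))


def group_potential_duplicates(
    reports: List[Report],
    max_distance_m: float = 75.0,              # assumed threshold, tune per incident
    max_gap: timedelta = timedelta(hours=2),   # assumed threshold, tune per incident
) -> List[List[Report]]:
    """Group reports that share a category and fall close together in space and time.

    Each group is anchored on its earliest report. Groups are suggestions for a
    human reviewer, not automatic deletions.
    """
    groups: List[List[Report]] = []
    for report in sorted(reports, key=lambda r: r.received):
        for group in groups:
            first = group[0]
            if (
                report.category == first.category
                and distance_m(report, first) <= max_distance_m
                and report.received - first.received <= max_gap
            ):
                group.append(report)
                break
        else:
            # No existing group matched, so this report starts a new one.
            groups.append([report])
    return groups
```

Any group with more than one report is a candidate duplicate that can be reviewed, merged, and closed as a single task, while the individual reports and their notes remain available for context.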